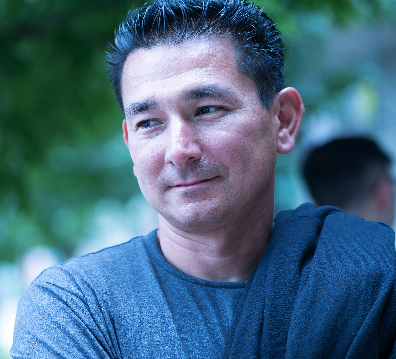

This was my first time at the RTC Conference at the Illinois Institute of Technology in Chicago, and I was blown away by the caliber of talks at the event. They ranged from very academic and theoretical to extremely practical, capturing what is happening on the ground today in real-time communications. The mix of attendees was one of the most diverse I have ever seen, ranging from seasoned professionals in their respective fields to bright and inquisitive minds from IIT starting their careers. I was fortunate to have some fantastic conversations while I was there, which I will get to at the end of this post. Overall, this was a mind-opening experience that I am grateful to have had.

My journey to Chicago included presenting two sessions at the conference. The first was titled “Enhancing Real-Time WebRTC Conversation Understanding Using ChatGPT” and the second was “Edge Devices as Interactive Personal Assistants: Unleashing the Power of Generative AI Agents”. The talks each had very different goals in what they were trying to achieve. Based on the feedback and number of questions that I got afterward in the hallway, they were very well received. Attendees got a glimpse into some unique and thought-provoking possibilities they could take home to explore.

Enhancing Real-Time WebRTC Conversation Understanding Using ChatGPT

The upshot of this session was using Generative AI and Large Language Models (LLMs) to influence conversations in real time. The backdrop was using WebRTC as a protocol and platform to host our conversation, but in reality, any medium conducive to carrying a conversation would suffice. However, WebRTC provides an ideal environment as it’s an open standard, and every modern browser has the capability of supporting these voice/video communications.

For the first time, Large Language Models and conversational AIs like ChatGPT have enabled us to influence the conversation on these platforms, because they can participate seamlessly and contribute something relevant to the discussion. That’s the key to why this is a “thing” today. These AIs can be proactive in a conversation and not just react to it.

If you are interested in learning more and seeing a really cool demo showcasing this in action, look at the recording above. The demo was definitely a crowd-pleaser since we got to highlight two powerful concepts: AI can retain the history of the conversation taking place and meaningfully participate to influence the simulated conversation for the demo.
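To make the "retain the history" idea concrete, here is a minimal sketch of one way it could be done. It is not the code from the demo. It assumes transcribed utterances arrive one at a time, and that the model accepts a list of role/content messages, a shape common to chat-style APIs. The names `Utterance`, `ConversationHistory`, and `as_messages` are hypothetical, and the actual model call is left out on purpose.

```python
from __future__ import annotations

from collections import deque
from dataclasses import dataclass


@dataclass
class Utterance:
    speaker: str
    text: str


class ConversationHistory:
    """Rolling window over the most recent utterances in a call (hypothetical helper)."""

    def __init__(self, max_turns: int = 50) -> None:
        # deque(maxlen=...) silently drops the oldest turn once the window is full
        self._turns: deque[Utterance] = deque(maxlen=max_turns)

    def add(self, speaker: str, text: str) -> None:
        self._turns.append(Utterance(speaker, text))

    def as_messages(self, system_prompt: str) -> list[dict[str, str]]:
        """Build a chat-style message list: system prompt first, then the retained turns."""
        messages = [{"role": "system", "content": system_prompt}]
        for turn in self._turns:
            # Assumption: the AI's own turns are stored under the speaker name "assistant"
            role = "assistant" if turn.speaker == "assistant" else "user"
            messages.append({"role": role, "content": f"{turn.speaker}: {turn.text}"})
        return messages


history = ConversationHistory(max_turns=3)
history.add("alice", "Can we move the launch to Friday?")
history.add("bob", "Only if QA signs off by Thursday.")
history.add("assistant", "QA has two open blockers as of this morning.")
history.add("alice", "Which ones?")

messages = history.as_messages("You are a helpful participant in this call.")
```

With a window of three turns, the oldest utterance falls out and the model only sees the latest context. A bounded window like this is one simple way to keep the prompt size under control during a long call.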

All of these resources, links to articles mentioned, and open source projects used in this presentation can be found in the slides. There are also instructions on how to reproduce the demo within this talk.

Edge Devices as Interactive Personal Assistants: Unleashing the Power of Generative AI Agents

My second session focused on Autonomous AI Agents. This might be an unfamiliar topic to some, but most of us have heard about them interacting in the real world. Unlike Siri and Alexa, which focus on a single transactional question and response, these are processes in which an AI model creates its own sub-tasks for problems that need more refinement or detail than a single answer can provide. Without these Autonomous Agents, we typically achieve this refinement ourselves by asking the AI follow-up questions to drill further into a problem. With an autonomous agent, the process generates its own questions to seek out the details of the answer.
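The sub-task idea can be sketched as a simple work queue. This is an illustration under stated assumptions, not how any particular agent framework works: `think` stands in for a call to an LLM that returns an answer plus any follow-up sub-tasks it wants explored, and `fake_think` is a canned stub so the example runs without a model. All names here are hypothetical.

```python
from collections import deque
from typing import Callable, List, Tuple

# (answer, follow-up sub-tasks) as returned by the hypothetical model call
StepResult = Tuple[str, List[str]]


def run_agent(
    goal: str,
    think: Callable[[str], StepResult],
    max_steps: int = 10,
) -> List[Tuple[str, str]]:
    """Work through a goal, letting the model queue its own sub-tasks."""
    pending = deque([goal])
    findings: List[Tuple[str, str]] = []
    # max_steps bounds the loop so a model that keeps spawning work cannot run forever
    while pending and len(findings) < max_steps:
        task = pending.popleft()
        answer, subtasks = think(task)
        findings.append((task, answer))
        pending.extend(subtasks)  # the agent asks its own follow-up questions
    return findings


def fake_think(task: str) -> StepResult:
    """Canned stand-in for an LLM call, for illustration only."""
    canned = {
        "plan a trip": ("Need flights and lodging.", ["find flights", "find lodging"]),
    }
    return canned.get(task, (f"Done: {task}", []))


for task, answer in run_agent("plan a trip", fake_think):
    print(f"{task} -> {answer}")
```

The step limit is the important design choice in a sketch like this: since the model decides how much extra work to create, something outside the model has to decide when to stop.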

Since these LLMs are getting smarter and consuming fewer resources than previous generations, and since devices are getting denser with more hardware capabilities and resources, they can live on IoT (Internet of Things) and Edge devices for the first time. This talk focused on different architectures that can be used to land these Autonomous Agents on IoT/Edge devices to handle jobs that could run anywhere from many minutes to multiple days. The trade-off in these cases is a more thorough, knowledge-dense answer versus the speed of the reply.

Take a look at the recording of the session above. There is also a demo at the end of this presentation that serves as a proof-of-concept to demonstrate what could be done with these Autonomous AI Agents using an open source project I wrote called Open Virtual Assistant. The demo highlights exercising the “memory” for these Agents (via their vector database) and how one might launch these agents within an IoT or Edge device.
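For readers who have not seen "memory" backed by a vector database before, here is a toy sketch of the idea. It is not the Open Virtual Assistant implementation and does not use a real vector database. The `embed` function is a deliberately crude stand-in (word counts) for a learned embedding model, and `VectorMemory` is a hypothetical in-memory class that ranks stored notes by cosine similarity to a query.

```python
from __future__ import annotations

import math
from collections import Counter


def embed(text: str) -> Counter:
    """Toy embedding: lowercase word counts. A real agent would use a learned model."""
    return Counter(text.lower().split())


def cosine(a: Counter, b: Counter) -> float:
    """Cosine similarity between two sparse word-count vectors."""
    dot = sum(a[w] * b[w] for w in a.keys() & b.keys())
    norm = math.sqrt(sum(v * v for v in a.values())) * math.sqrt(sum(v * v for v in b.values()))
    return dot / norm if norm else 0.0


class VectorMemory:
    """In-memory stand-in for an agent's vector database (hypothetical)."""

    def __init__(self) -> None:
        self._items: list[tuple[Counter, str]] = []

    def remember(self, text: str) -> None:
        self._items.append((embed(text), text))

    def recall(self, query: str, k: int = 1) -> list[str]:
        """Return the k stored notes most similar to the query."""
        q = embed(query)
        ranked = sorted(self._items, key=lambda item: cosine(q, item[0]), reverse=True)
        return [text for _, text in ranked[:k]]


memory = VectorMemory()
memory.remember("the user prefers metric units")
memory.remember("the garage door sensor is offline")

recalled = memory.recall("which sensor is offline")
```

Here the query shares the most words with the garage door note, so that note is what comes back. A production setup would swap in real embeddings and a persistent store, but the remember-then-recall-by-similarity shape stays the same.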

Again, all of these resources, links to the articles mentioned, and open source projects used in this presentation can be found in the slides, along with instructions on reproducing the demo from this talk.

Looking Back… Personal Reflections

My most memorable parts of the conference were the conversations with other attendees. The last time I attended a conference was in the second half of 2022, just before ChatGPT blew up in the media. Oh, how times have changed! Besides a good number of the talks focusing on ChatGPT or AI in general, the buzz was definitely in the air and dominated most of the chatter in the hallways. I love tech talk, but I might love these philosophical, what-if, and future-predicting conversations even more.

One of the questions I get asked most frequently as someone who works in AI/ML is, “Will humans lose our jobs to AI?” I was also asked this while at the conference. My response is always yes and no. Yes, certain jobs will likely become obsolete… and no, there will always be jobs out there since we haven’t solved all the problems contained within our existence. Those jobs might just look completely different from what they are today.

An example I always like to give is the calculator and personal computer. When the calculator became mainstream, did all accounting or anything related to math disappear? The answer is definitely No. Society then created far more advanced “calculators” in the form of personal computers that do a bunch of repetitive tasks and naturally transitioned into automation. This is the automation of building cars, canning foods, or mass-producing clothing or shoes. This automation obsoleted people canning foods but created new jobs to develop and maintain these new automation systems.

Everyone recognizes the potential for Artificial Intelligence as the next significant disruptor to humanity. My advice is that just like the calculator or computer, it’s best to understand and know how to use these systems regardless of your field. Those who know how to leverage AI will outshine those who don’t. Finally, when there isn’t a need for a door-to-door encyclopedia salesperson, it’s best to objectively recognize the change in tides and learn something new to transition to. That’s my long-winded answer.

I had a great time at the RTC Conference at IIT, and if you are a person like me who loves to learn about new and exciting topics, technologies, and ideas, then this is a great place to expand your mind. I highly recommend going and hope I am lucky enough to be working on something compelling enough to share with others next year. Cheers!